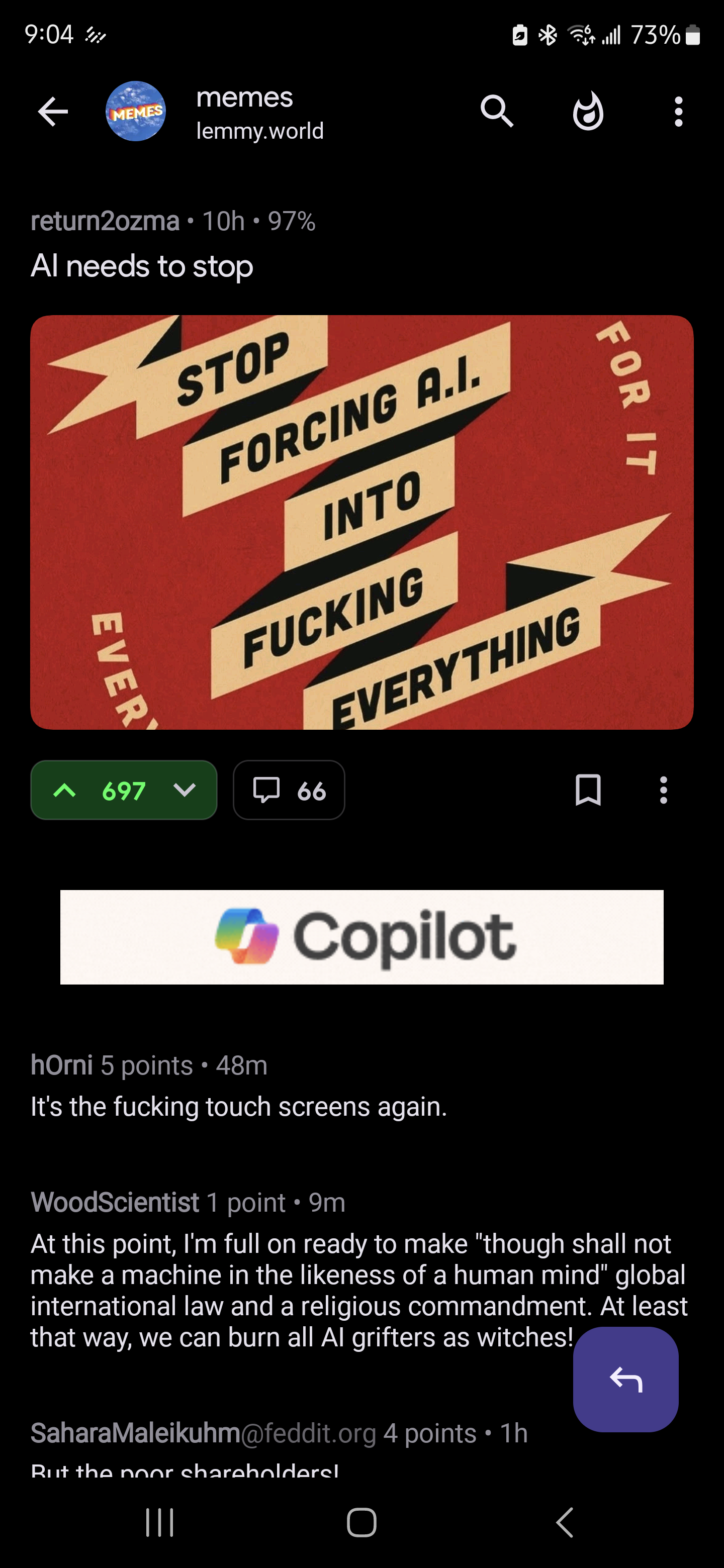

I hated it when everything became ‘smart’.

Now everything has ‘AI’.

Nothing was smart. And that’s not AI.

Everything costs more, everything has a stupid app that gets abandoned and an IoT backend that’s on life support from the moment it’s turned on. Subscriptions everywhere! Everything is built to lower quality and lower standards.

Get into self hosting.

Smart everything without subscriptions. And with you in control.

My home is already plenty dumb enough without me exerting that level of control.

they don’t care. you’re not the audience. the tech industry lives on hype. now it’s ai because before that they did it with nft and that failed. and crypto failed. tech needs a grift going to keep investors investing. when the bubble bursts again they’ll come up with some other bullshit grift because making useful things is hard work.

Last year it was APIs

Hahaha the inane shit you can read on this website

I was ok with crypto and nft because it was up to me to decide if I want to get involved in it or not.

AI does seem to have an impact on jobs; at least, employers are trying it out to see whether it will actually let them hire fewer staff. I see that in SWE. I don’t think AI will do much there, though.

it’s not up to you, it just failed before it could be implemented. many publishers had already committed to in-game nfts before they had to back down because it fell apart too quickly (and some still haven’t). if it had held on for just a couple more years, there wouldn’t be a single aaa title without nfts today.

crypto was more complicated because unlike these two you can’t just add it and say “here, this is all crypto now” because it requires prior commitment and it’s way too complicated for the average person. plus it doesn’t have much benefit: people already give you money and buy fake coins anyway.

I’m giving examples from games because it’s the most exploitative market but these would also seep into other apps and services if not for the hurdles and failures. so now we’re stuck with this. everyone’s doing it because it’s a gold rush except instead of gold it’s literal shit, and instead of a rush it’s literal shit.

— tangent —

… and just today I realized I had to switch back to Google Assistant because literally the only thing Gemini can do is talk back to me; it can’t do anything useful, including the simplest shit like converting currency.

“I’m sorry, I’m still learning” – why, bitch? why don’t you already know this? what good are you if I ask you to do something for convenience and instead you tell me to do it manually and start explaining how I can do the most basic shit that you can’t do as if I’m the fucking idiot.

NFTs didn’t fail; it was just the idiotic selling of JPEGs for obscene amounts that crashed (most of that was likely money laundering anyway). The tech still has a use.

Wouldn’t exactly call crypto a failure, either, when we’re in the midst of another bull run.

> when we’re in the midst of another bull run.

Oh, that’s nice. So, whose money are you rug pulling?

Words have meaning, let’s use them properly, okay?

Somebody has to hold the bag. I don’t think this is functionally different.

The problem isn’t AI. The problem is greedy, clueless management pushing half baked products of dubious value on consumers.

So… the AI?

If only it were just AI…

Natural unintelligence

You can use the Warhammer 40k nomenclature of “abominable intelligence”. I’m not a gaming nerd, but I find it fitting for fancy statistics in a trench coat.

And dubious ethical origin.

And environmental cost.

Yes but now they have a tool…

I kind of like AI, sorry.

But it should all be freely available & completely open sourced since they were all built with our collective knowledge. The crass commercialization/hoarding is what’s gross.

I like what we could be doing with AI.

For example, there’s one AI I read about a while back that was given data sets on all the known human diseases and the medications used to treat them.

Then it was given data sets of all the known chemical compounds (or something like that, I can’t remember the exact wording).

Then it was used to find new potential treatments for diseases. Like new antibiotics. Basically it gives medical researchers leads to follow.

That’s fucking cool and beneficial to everyone. It’s a wonderful application of the tech. Do more of that please.

What you are talking about is machine learning which is called AI. What the post is talking about is LLMs which are also called AI.

By definition, AI means anything artificial (that is, not natural) that exhibits intelligent behavior.

So when you use GMaps to find the shortest path between two points, that’s also AI (specifically, heuristic search).

It is pointless to argue about or discuss AI if nobody even knows which type they are talking about.

The issue is that people tend to overgeneralize, and they also become averse to a buzzword once it is repeated too much.

So this negatively affects the entire field of AI.
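Shortest-path route finding is the classic textbook case of informed (heuristic) search, and A* is the standard example. The sketch below runs A* on a tiny made-up grid standing in for a street map; it only illustrates the technique and makes no claim about how Google Maps actually computes routes.

```python
import heapq
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def a_star(grid: List[str], start: Cell, goal: Cell) -> Optional[int]:
    """Length of the shortest 4-connected path on a grid, or None if unreachable.

    grid: rows of characters; '#' is a wall, anything else is walkable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance never overestimates for unit-cost 4-way moves,
        # so A* is guaranteed to return an optimal path length.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_g = {start: 0}
    while open_heap:
        _f, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            return g
        if g > best_g.get(cell, float("inf")):
            continue  # stale entry: a cheaper route to this cell was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None


city = [
    "...#.",
    ".#.#.",
    ".#...",
    "...#.",
]
```

For example, `a_star(city, (0, 0), (3, 4))` returns `7`: the heuristic steers the search around the walls without exploring every cell.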

I’m talking about AI in the context of this conversation.

I’m sorry it upsets you that capitalism has yet again redefined another word to sell us something else, but nobody here is specifically responsible for the language we’ve been given to talk about LLMs.

Perhaps writing to Merriam-Webster about the issue could reap the results you’re looking for.

LLMs are an instance of AI. There are many. Typically, the newest promising one is what the media will refer to as “AI” because the media don’t do subtlety.

There was a time when expert systems were the one thing the media considered to be AI (and were overhyped to the point of articles wondering if they’d make jobs like general practitioners obsolete). Now it’s generative neural nets. In twenty years it’ll be something else.

Yes! So much better statement.

Yeah. I’ve been interested in AI for most of my life. I’ve followed AI developments, and tinkered with a lot of AI stuff myself. I was pretty excited when ChatGPT first launched… but that excitement turned very sour after about a month.

I hate what the world has become. Money corrupts everything. We get the cheapest, most exploitative version of every possible idea, and when it comes to AI, that’s a pretty big net negative on the world.

I mean, you’re technically correct from a copyright standpoint, since it would be easier to claim fair use for non-commercial research purposes. And bots built for one’s own amusement with open-source tools are way less concerning to me than black-box commercial chatbots that purport to contain “facts” when they are known to contain errors and biases, not to mention vast amounts of stolen copyrighted creative work. But even non-commercial generative AI has to reckon with its failure to recognize “data dignity”, that is, the right of individuals to control how data generated by their online activities is shared and used… virtually nobody except maybe Jaron Lanier and the folks behind Brave is even thinking about this issue, but it’s at the core of why people really hate AI.

You had me in the first half, but then you lost me in the second half with the claim of stolen material. There is no such material inside the AI, just the ideas that can be extracted from such material. People hate their ideas being taken by others but this happens all the time, even by the people that claim that is why they do not like AI. It’s somewhat of a rite of passage for your work to become so liked by others that they take your ideas, and every artist or creative person at that point has to swallow the tough pill that their ideas are not their property, even when their way of expressing them is. The alternative would be dystopian since the same companies we all hate, that abuse current genAI as well, would hold the rights to every idea possible.

If you publicize your work, your ideas being ripped from it is an inevitability. People learn from the works they see, and some of them try to understand why certain works are so interesting, extracting the ideas that make them so, and that is what AI does as well. If you hate AI for this, you must also hate pretty much all creative people for doing the exact same thing. There’s even a famous quote about it from before AI was a thing: “Good artists copy, great artists steal.”

I’d argue that abusing AI to replace (or consider replacing) artists and other working creatives, spreading misinformation, simplifying scams, wasting resources by using AI where it doesn’t belong, and any other unethical uses of AI are far worse than it tapping into the same freedom we all already enjoy. People actually using AI for good means will not be pumping out cheap AI slop, but are instead weaving it into their process to the point it is not even clear AI was used at the end. They are not the same and should not be confused.

> a rite of passage for your work to become so liked by others that they take your ideas,

ChatGPT is not a person.

> People learn from the works they see […] and that is what AI does as well.

ChatGPT is not a person.

It’s actually really easy: we can say that ChatGPT, which is not a person, is also not an artist, and thus cannot make art.

The mathematical trick of putting real images into a blender and then outputting a Legally Distinct™ one does not absolve the system of its source material.

> but are instead weaving it into their process to the point it is not even clear AI was used at the end.

The only examples of AI in media that I like are ones that make it painfully obvious what they’re doing.

Was shopping for a laundry machine for my parents and LG, I shit you not, has an AI laundry machine now. I just can’t even

The reassuring thing is that AI actually makes sense in a washing machine. Generative AI doesn’t, but that’s not what they use. AI includes learning models of different sorts. Rolling the drum a few times to get a feel for weight, and using a light sensor to check water clarity after the first time water is added lets it go “that’s a decent amount of not super dirty clothes, so I need to add more water, a little less soap, and a longer spin cycle”.

They’re definitely jumping on the marketing train, but problems like that do fall under AI.
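A rough sketch of the kind of rule described above, assuming two already-processed sensor readings: an estimated load weight and a water turbidity score. The thresholds and multipliers are invented for illustration and are not LG’s, or anyone else’s, actual values.

```python
from dataclasses import dataclass


@dataclass
class WashPlan:
    water_litres: float
    detergent_ml: float
    spin_minutes: int
    rinses: int


def plan_wash(load_kg: float, turbidity: float) -> WashPlan:
    """Pick wash parameters from two sensor readings (illustrative constants only).

    load_kg: estimated from how hard the motor works while tumbling the drum.
    turbidity: 0.0 (clear) to 1.0 (very murky), read optically after the first fill.
    """
    water = 6.0 * load_kg  # more clothes need more water
    detergent = 10.0 * load_kg * (0.5 + turbidity)  # cleaner water means less soap
    rinses = 1 if turbidity < 0.3 else 2  # dirty loads get an extra rinse
    spin = 8 + round(2 * load_kg)  # heavier loads get a longer spin
    return WashPlan(round(water, 1), round(detergent, 1), spin, rinses)
```

For a 5 kg load of lightly soiled clothes, `plan_wash(5.0, 0.2)` yields 30 L of water, 35 ml of detergent, one rinse and an 18-minute spin. The point is only that perception plus a decision rule is enough; no generative model is involved.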

The thing is, we’ve had that sort of capability for a long time now; we called them algorithms. Rebranding it as AI is pure marketing bullshit.

Well that’s sort of my point. It’s an algorithm, or set of techniques for making one, that’s been around since the 50s. Being around for a long time doesn’t make it not part of the field of AI.

The field of AI has a long history of the fruits of their research being called “not AI” as soon as it finds practical applications.

The system is taking measurements of its problem area. It’s then altering its behavior to produce a more optimal result given those measurements. That’s what intelligence is. It’s far from the most clever intelligence, and it doesn’t engage in reason or have the ability to learn.

In the last iteration of the AI marketing cycle companies explicitly stopped calling things AI even when it was. Much like how in the next 5-10 years or so we won’t label anything from this generation “AI”, even if something is explicitly using the techniques in a manner that makes sense.

No, problems like “how dirty is this water” do not fall under AI. It’s a pretty simple variable of the type software has been dealing with since forever.

Wouldn’t you know, AI has also been algorithmically based and around since the 1950s?

AI as a field isn’t just neural networks and GPUs invented in the last decade. It includes a lot of stuff we now consider pretty commonplace.

Using some simple variables to measure a few continuous values, and making decisions about soap quantity, water to dispense, and the number of rinse cycles, is pretty much a textbook example of classical AI: environmental perception, and changing actions to maximize the quality of the task outcome.

Respectfully, there’s no universe in which any type of AI could possibly benefit a load of laundry in any way. I genuinely pity anyone who falls for such a ridiculous and obvious scam

I would say that when the intelligent washing machine has access to sensors (weight, hardness of water, types of laundry detergents) and actuators (releasing the right amount of detergents, water, spin to the barrel) it could make an optimal washing of laundry.

I would counter that non-optimal washing by doing what I ask via primitive buttons and dials is perfectly acceptable, and actually preferable

That’s a different argument entirely from “no possible benefit”, though

Good for you. You might also be interested in this tool called a “washtub” that lets you do everything exactly how you want, without needing to trust a computer to interpret the positions of fancy dials and figure out how much to agitate your socks.

No, it couldn’t. That’s pure tech bro logic without any basis whatsoever in reality.

The machines already have these sensors. There’s simply nothing for “intelligence” to contribute to the process. It’s not enough for you to point to the presence of various sensors and claim it could do something with them when in reality this is already a solved problem. Additionally, the hypothetical AI-equipped machine itself will also be worse, using significantly more energy and being less reliable.

I say hypothetical, because the specific LG machine we’re talking about doesn’t even actually have any AI component. Yes I am aware of the difference between generative and analytical models; it has neither. Just normal sensors and algorithms that all modern washing machines have had for years. They threw the “AI” language on it to market it to people. You know, like a scam. Because the delightful thing about “AI” is you don’t need to provide any benefit to your marks, their imagination will do the work for you

I love it when people angrily declare that something AI researchers figured out in the 60s can’t be AI because it involves algorithms.

Using an algorithm to take a set of continuous input variables and map them to a set of continuous output variables in a way that maximizes result quality is an AI algorithm, even if it’s using a precomputed lookup table.

AI has been a field since the 1950s. Not every technique for measuring the environment and acting on it needs to be some advanced deep learning model for it to be a product of AI research.
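As an illustration of the “precomputed lookup table” approach, here is a minimal sketch that linearly interpolates between rows of a hypothetical load-to-water table. The table values are made up; real firmware would be tuned per machine.

```python
from bisect import bisect_right
from typing import List, Tuple

# Hypothetical precomputed table of (load in kg, water in litres), sorted by load.
WATER_TABLE: List[Tuple[float, float]] = [(1.0, 8.0), (3.0, 18.0), (6.0, 30.0), (9.0, 38.0)]


def lookup_water(load_kg: float, table: List[Tuple[float, float]] = WATER_TABLE) -> float:
    """Linearly interpolate the water volume for a measured load, clamping at the ends."""
    loads = [x for x, _ in table]
    if load_kg <= loads[0]:
        return table[0][1]
    if load_kg >= loads[-1]:
        return table[-1][1]
    i = bisect_right(loads, load_kg)  # loads[i - 1] <= load_kg < loads[i]
    (x0, y0), (x1, y1) = table[i - 1], table[i]
    t = (load_kg - x0) / (x1 - x0)  # fractional position between the two rows
    return y0 + t * (y1 - y0)
```

A 4.5 kg reading falls halfway between the 3 kg and 6 kg rows, so `lookup_water(4.5)` returns 24.0. A continuous input maps to a continuous output with no learning at run time.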

Then they may as well say they did it “with computers.”

Oh, but that’s not sexy, is it.

I mean, no, it isn’t. It is a marketing decision after all.

That doesn’t mean that type of thing isn’t the product of AI research.

so that specific LG machine can detect the water hardness, what fabrics are used in the clothes it should launder, what detergents are available?

Do you have an example of an AI system being deployed to do these things or is it, as I said, pure hypothetical tech bro logic?

But yeah it basically squirts some water in at the top, then analyzes the water that reaches the bottom (and how much) to infer the fabric types. That same information is then considered when dispensing detergent and fabric softener. Simple sensors and tables

that’s pretty cool then.

You can’t see a benefit to a washing machine that can wash clothes without you needing to figure out how much soap to add or how many rinse cycles it needs?

I genuinely pity anyone so influenced by marketing that they can’t look at what a feature actually does before deciding they hate it.

Those features are literally unrelated to AI, just so you know. It’s comparing sensor outputs to a table. Like all modern laundry machines. The inclusion of “AI” on the label is purely to take advantage of people like you who instantly believe whatever they’re told, even if it’s as outlandish as “your laundry has been optimized” lol

Yeah, I know how it works, and I also know how different types of AI work.

It’s a field from the 50s concerned with making systems that perceive their environment and change how they execute their tasks based on those perceptions to maximize the fulfillment of their task.

Yes, all modern laundry machines utilize AI techniques involving interpolation of sensor readings into a lookup table to pick wash parameters more intelligently.

You’ve let sci-fi notions of what AI is get you mad at a marketing department for realizing that we’re back to being able to label AI stuff correctly.

The fact that you’ve been reduced to blabbering about such mundane things in the style of “the ghosts in pac-man technically had AI” tells us everything we need to know here. Have fun arguing with me in the shower about whether or not current trends are just a result of marketing executives finally being liberated to appropriately label the AI they’ve been using for 70 years

Cool story bro. Keep being angry about the meaning of words I guess, if it makes you happy.

I love these technical discussions. Just kicking each other in the nuts over and over.

It’s probably just a sticker they put over the word “smart”

My parents got a new washer and dryer and they are wifi enabled. Why tf do they need to be wifi enabled? It won’t move the laundry from the washer to the dryer, so it’s not like you can set the laundry and then go about your errands and come home to dry clothes ready to be folded

Honestly, I find this feature of my washer/dryer super useful because it reminds me to turn the stuff over instead of forgetting and letting it sit in the washer getting mildewy.

Like others mentioned, this one actually makes sense. Letting you know your washing is done so you can move it to the dryer, and letting you know it’s dry so you can fold it, is actually super helpful. I studied at a uni that had a connected laundry room, so I didn’t have to go all the way there to check whether the machine was done with my laundry.

It already sings a song for 30 seconds when the load is done. I understand a notification at a laundromat, but what good is that really in your home?

Some people have their laundry machines far away, some people wear headphones while waiting, there are many reasons a notification helps

A notification that your load is done is actually convenient. It’s typically also paired with some sensors that can let you know if you need more detergent or to run a cleaning cycle on the washer.

Mine also lets you set the wash parameters via the app if you want, which is helpful for people who benefit from the accessibility features of the phone. It’s difficult to adjust the font size or contrast on a washing machine, or to hear its chime if you have hearing problems.

Actually this one is sensible.

In the near future as more renewable energy is included in power grids the price of power will fluctuate depending on the weather.

The WiFi connection will allow you to configure your washing to be done when pricing reaches whatever point.
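A minimal sketch of that kind of price-triggered scheduling, assuming the machine (or its app) has an hourly price forecast. The `prices` list, the price threshold and the `finish_by` deadline are hypothetical parameters, not any vendor’s API.

```python
from typing import Optional, Sequence


def pick_start_hour(prices: Sequence[float], cycle_hours: int,
                    max_avg_price: float, finish_by: int) -> Optional[int]:
    """Earliest start hour whose whole cycle averages at or below max_avg_price.

    prices[h] is the forecast price for hour h (e.g. cents/kWh). The cycle runs
    over hours start .. start + cycle_hours - 1 and must end by hour finish_by.
    Returns None if no start hour qualifies.
    """
    last_start = min(finish_by, len(prices)) - cycle_hours
    for start in range(0, last_start + 1):
        window = prices[start:start + cycle_hours]
        if sum(window) / cycle_hours <= max_avg_price:
            return start
    return None
```

With `prices = [30, 28, 12, 10, 11, 25, 32, 35]`, a two-hour cycle and a threshold of 12, the sketch picks hour 2. Tightening `finish_by` trades savings for convenience, which is also how a “done when I get home” timer could be expressed.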

Ah yes, please fire up the washing machine at 3am and scare the fuck out of everybody. And then let the clothes sit in there wet so that when you wake up, they smell like mildew

Everything else aside, you need to clean your washing machine. Clothes shouldn’t be smelling like mildew after less than a day in it.

Modern washing machines are also pretty quiet.

> Everything else aside, you need to clean your washing machine. Clothes shouldn’t be smelling like mildew after less than a day in it.

A bit of exaggeration to make a point. :)

> Modern washing machines are also pretty quiet.

Not when they’re a room away from the master bedroom. Having it start up in the middle of the night would be either annoying, terrifying, or both.

Fair enough. You’d be surprised how many people don’t know you need to clean them occasionally and think it’s normal for stuff to go terribly wrong really quickly. :)

I got a new washer relatively recently and it’s quiet enough that it’s not really audible from the next room unless you tell it to do a really aggressive spin cycle with a big load.

In any case, I think the point of the timed wash feature is to make your laundry finish right when you get home rather than overnight.

I mean, they have Alexa connected refrigerators with a camera inside the fridge that sees what you put in it and how much, to either let you know when you’re running low on something or ask to put in an order for more of that item before you run out, or tell you if something in there is about to spoil, or if the fridge needs cleaned, so I imagine a washer would do something similar?

Instructions unclear. AI in healthcare treatment decisions.

Train, backpropagate, optimize.

I got a Christmas card from my company. As part of the Christmas greeting, they promoted AI, something to the effect of “We wish you a merry Christmas, much like the growth of AI technologies within our company” or something like that.

Please no.

That’s so fucking weird wtf. Do you work for Elon Musk or something lmao

I work as a dev in an IT consulting company. My work includes zero AI development, but other parts of the company are embracing it.

Same, and same…

LMAO

It’s the fucking touch screens again.

Do you remember the original touch screens? They were pressure based and suuuuuuuuucked.

Not as bad as the IR touch screens. They had an IR field projected just above the surface of the screen that would be broken by your finger, which would register a touch at that location.

Or a fly landing on your screen and walking a few steps could drag a file into the recycle bin.
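Roughly, an IR touch frame lines two edges of the screen with emitters and the opposite edges with detectors; a touch is located where beams on both axes are interrupted. The sketch below estimates the point as the centre of the interrupted beams, assuming a hypothetical fixed beam pitch. It also shows why a fly looks just like a finger: anything opaque breaks the beams.

```python
from typing import Optional, Sequence, Tuple


def ir_touch_point(blocked_x: Sequence[int], blocked_y: Sequence[int],
                   pitch_mm: float = 5.0) -> Optional[Tuple[float, float]]:
    """Estimate a touch position (in mm) from which IR beams are interrupted.

    blocked_x: indices of vertical beams (columns) not reaching their detectors.
    blocked_y: indices of horizontal beams (rows) not reaching their detectors.
    pitch_mm: hypothetical spacing between adjacent beams.
    """
    if not blocked_x or not blocked_y:
        return None  # a break on only one axis cannot be located as a point
    cx = sum(blocked_x) / len(blocked_x)  # centre of the shadow along x
    cy = sum(blocked_y) / len(blocked_y)  # centre of the shadow along y
    return (cx * pitch_mm, cy * pitch_mm)
```

A shadow over columns 10 to 12 and rows 4 to 5 gives `(55.0, 22.5)`; as the shadow walks across the frame, the reported point moves with it, which is all a drag gesture needs.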

Speaking from experience?

Not that specific one, but I did have a fly fuck up a selection in photoshop a few times before I just unplugged the USB connection to the monitor.

But then we wouldn’t have to pay real artists for real art anymore, and we could finally just let them starve to death!

In film school (25 years ago), there was a lot of discussion around whether or not commerce was antithetical to art. I think it’s pretty clear now that it is. As commercial media leans more on AI, I hope the silver lining will be a modern Renaissance of art as (meaningful but unprofitable) creative expression.

If your motives are profit, you can draw furry porn or get a real job.

Eh, I’ve made a decent living making commercials and corpo stuff. But not for lack of trying to get paid for art. For all the money I made working on ~50 short films and a handful of features, I could maybe buy dinner. Just like in the music industry, distributors pocket most of the profit.

Art seems like a side hustle or a hobby, not a main job. I can’t think of a faster way to hate your own passion.

I wanted to work as a programmer, but getting a degree taught me I’m too poor to do it as a job: I’d need six more qualifications, and to have known the language for longer than it has existed, just to get an interview for the grind. I’m having fun building a stupid side project to bother my friends, though.

Exactly. I can code and make a simple game app. If it gets some downloads, maybe pulls in a little money, I’m happy. But I’m not gonna produce endless mtx and ad-infested shovelware to make shareholders and investors happy. I also own a 3D printer. I’ve done a few projects with it and I was happy to do them, I’ve even taken commissions to model and print some things, but it’s not my main job as there’s no way I could afford to sit at home and just print things out all month.

My only side-hustle-worthy skill is fixing computers, and I’d rather swallow a hot soldering iron than meet a stranger and get money involved.

Strangely, that is a lot of who is complaining. It was a Faustian bargain: draw furry porn and earn money, but never be allowed to use your art in a professional sense ever again.

Then AI art came and replaced them, so it became lose-lose.

I don’t know where else you could find enough work to sustain yourself other than furry porn and hentai before AI. Post-AI, even that is gone.

Yiff in hell furf-

Wait, what

The issue is that the 8 hours people spend in “real” jobs are a big hindrance; that time could be spent doing art instead, and most of those ghouls now want us to work overtime just for the basics. Worst case scenario, there’ll be a creativity drought, with idea guys taking the place of real artists by using generative AI. Best case scenario, the AI boom totally collapses and all commercial models become expensive to use. Seeing where the next Trump administration will take us, it’s a second Gilded Age + heavy censorship + potential deregulation around AI.

You are overexaggerating under the assumption that there will exist a social and economic system based on greed and death threats, which sounds very unreali-- Right, capitalism.

But, but, but they want to sell as much of it as they can before the bubble bursts.

It’s worse. The industry needs to entrench LLMs and other AI so that after the bubble bursts, it’s so grafted into everything that it can’t easily be removed, and afterwards everybody still has to go through them and pay a buck.

Basically it’s like a tick that needs to dig in deep now so you can’t get rid of it later.

Relevant ad.

My thermostat hides no-brainer features behind an “AI” subscription. Switching off the heating when the weather will be warm that day doesn’t need AI… that’s not even machine learning, that’s a simple PID controller.
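For reference, a PID controller computes its output from the current error (setpoint minus measurement), the accumulated error, and the error’s rate of change. A minimal sketch, with arbitrary gains and the output clamped to a 0 to 100 % heater duty cycle (an assumption for illustration):

```python
from typing import Optional


class PID:
    """Textbook PID controller; gains and output limits are illustrative."""

    def __init__(self, kp: float, ki: float, kd: float, setpoint: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint  # target temperature, e.g. 21.0 °C
        self.integral = 0.0  # accumulated error over time
        self.prev_error: Optional[float] = None  # no derivative on the first call

    def update(self, measured: float, dt: float) -> float:
        """Return heater power in percent (0-100) for the latest reading."""
        error = self.setpoint - measured
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(0.0, min(100.0, output))  # clamp to the heater's range
```

If the room is already warmer than the setpoint, the error is negative and the clamped output is 0, so the heating stays off. A real thermostat would also want integral anti-windup, and the forecast-based shutoff the comment mentions would be a simple rule layered on top of this loop.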

I’m so glad I switched to just home assistant and zigbee devices, and my radiators are dumb, so I could replace them with zigbee ones. Fuck making everything “smart” a subscription

I think I will try ESPHome; half of my appliances are Tasmota-based now. I was just too lazy to research compatible thermostats… (painful hindsight)…

Even the supposed efficiency benefits of the Nest basically come down to “if you leave the house and forget to turn the air down, we will do it for you automatically”.

AI is one of the most powerful tools available today, and as a heavy user, I’ve seen firsthand how transformative it can be. However, there’s a trend right now where companies are trying to force AI into everything, assuming they know the best way for you to use it. They’re focused on marketing to those who either aren’t using AI at all or are using it ineffectively, promising solutions that often fall short in practice.

Here’s the truth: the real magic of AI doesn’t come from adopting prepackaged solutions. It comes when you take the time to develop your own use cases, tailored to the unique problems you want to solve. AI isn’t a one-size-fits-all tool; its strength lies in its adaptability. When you shift your mindset from waiting for a product to deliver results to creatively using AI to tackle your specific challenges, it stops being just another tool and becomes genuinely life-changing.

So, don’t get caught up in the hype or promises of marketing tags. Start experimenting, learning, and building solutions that work for you. That’s when AI truly reaches its full potential.

I think there’s specific industrial problems for which AI is indeed transformative.

Just one example that I’m aware of is the AI-accelerated Nazca Lines survey that revealed many more geoglyphs we were not previously aware of.

However, this type of use case just isn’t relevant to most people, whose reliance on LLMs is “write an email to a client saying xyz” or “summarise this email that someone sent to me”.

One of my favorite examples is “smart paste”. Got separate address fields (city, state, ZIP, etc.)? Have the user copy the full address, click “Smart paste”, and feed the clipboard to an LLM with a prompt to transform it into the data your form needs. Absolutely game-changing imho.

Or data ingestion from email: many of my customers get emails from their customers with instructions that someone at the company has to convert into form fields in the app. Instead, we provide an email address (some-company-inbound@myapp.domain), feed the incoming emails into an LLM, ask it to extract any details it can (number of copies, post-processing, page numbers, etc.), and have that auto-fill fields for the customer to review before approving the incoming details.

So many incredibly powerful use-cases and folks are doing wasteful and pointless things with them.
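A minimal sketch of the smart-paste idea, assuming a model call passed in as a plain function (prompt in, text out). The `complete` parameter, the prompt wording and the field names are hypothetical, not any particular library’s API. The important part is treating the model’s reply as untrusted: parse it, keep only expected fields, and let the user review.

```python
import json
from typing import Callable, Dict

# Hypothetical form fields; adjust to whatever your form actually has.
FIELDS = ("street", "city", "state", "zip")

PROMPT = (
    "Extract a postal address from the text below. Reply with only a JSON object "
    "with the keys street, city, state and zip; use null for anything missing.\n\n"
    "Text:\n{clipboard}"
)


def smart_paste(clipboard: str, complete: Callable[[str], str]) -> Dict[str, str]:
    """Map free-form pasted text onto form fields using an LLM.

    `complete` is a stand-in for whatever model call you use (prompt in, text out);
    it is not any particular library's API.
    """
    raw = complete(PROMPT.format(clipboard=clipboard))
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return {}  # not JSON: leave the form empty rather than guess
    if not isinstance(data, dict):
        return {}
    # Keep only the expected keys with string values; the user still reviews them.
    return {k: data[k] for k in FIELDS if isinstance(data.get(k), str)}
```

In a test or demo, `complete` can be a stub that returns canned JSON, which keeps the form logic checkable without running a model.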

If I’m brutally honest, I don’t find these use cases very compelling.

Separate fields for addresses could be easily solved without an LLM. The only reason there isn’t already a common solution is that it just isn’t that much of a problem.

Data ingestion from email will never be as efficient and accurate as simply having a customer fill out a form directly.

These things might make someone mildly more efficient at their job, but given the resources required for LLMs is it really worth it?
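To illustrate that counterpoint, here is a non-LLM sketch that splits a pasted address with a regular expression. It only handles one common US layout (“street, city, ST 12345”, optionally ZIP+4); anything else returns `None`, which is where the trade-off between a bespoke parser and a model shows up.

```python
import re
from typing import Dict, Optional

# One common US layout: "street, city, ST 12345" or with ZIP+4 "12345-6789".
ADDRESS_RE = re.compile(
    r"^\s*(?P<street>[^,]+),\s*(?P<city>[^,]+),\s*"
    r"(?P<state>[A-Z]{2})\s+(?P<zip>\d{5}(?:-\d{4})?)\s*$"
)


def split_address(text: str) -> Optional[Dict[str, str]]:
    """Split a pasted US address into fields without an LLM; None if it doesn't match."""
    normalized = " ".join(text.split())  # collapse newlines and repeated spaces
    m = ADDRESS_RE.match(normalized)
    if m is None:
        return None
    return {k: v.strip() for k, v in m.groupdict().items()}
```

`split_address("1 Main St,\nSpringfield, IL 62701")` gives the four fields; a paste without the commas, or from another country, falls through to `None`.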

Well, the address one was an example. Smart paste is useful for more than just addresses - Think non-standard data formats where a customer provided janky data and it needs wrangling. Happens often enough and with unique enough data that an LLM is going to be better than a bespoke algo.

The email one though? We absolutely have dedicated forms, but that doesn’t stop end users from sending emails to our customer anyway - The email ingestion via LLM is so our customer can just have their front desk folks forward the email in and have it make a best guess to save some time. When the customer is a huge shop that handles thousands of incoming jobs per day, the small value adds here and there add up to quite the savings for them (and thus, value we offer).

Given we run the LLMs on low power machines in-house … Yeah they’re worth it.

Yeah, still not convinced.

I work in a field which is not dissimilar. Teaching customers to email you their requirements so your LLM can have a go at filling out the form just seems ludicrous to me.

Additionally, the models you’re using require stupid amounts of power to produce so that you can run them on low power machines.

Anyhow, neither of us is going to change our minds without actual data which neither of us have. Who knows, a decade from now I might be forwarding client emails to an LLM so it can fill out a form for me, at which time I’ll know I was wrong.

That’s really neat, thanks for sharing that example.

In my field (biochemistry), there are also quite a few truly awesome use cases for LLMs and other machine learning stuff, but I have been dismayed by how the hype train on AI stuff has been working. Mainly, I just worry that the overhyped nonsense will drown out the legitimately useful stuff, and that the useful stuff may struggle to get coverage/funding once the hype has burnt everyone out.

I suspect that this is “grumpy old man” type thinking, but my concern is the loss of fundamental skills.

As an example, like many other people I’ve spent the last few decades developing written communication skills, emailing clients regarding complex topics. Communication requires not only an understanding of the subject, but an understanding of the recipient’s circumstances, and the likelihood of the thoughts and actions that may arise as a result.

Over the last year or so I’ve noticed my assistants using LLMs to draft emails, with deleterious results. In many cases this use reduces my thinking, feeling, experienced and trained assistant to an automaton regurgitating words from publicly available references. The usual response to this concern is that my assistants are using the tool incorrectly, which is certainly the case, but my argument is that the use of the tool precludes the expenditure of the requisite time and effort to really learn.

Perhaps this is a kind of circular argument, like why do kids need to learn handwriting when nothing needs to be handwritten.

It does seem as though we’re on a trajectory towards stupider professional services though, where my bot emails your bot who replies and after n iterations maybe they’ve figured it out.

Oh yeah, I’m pretty worried about that from what I’ve seen in biochemistry undergraduate students. I was already concerned about how little structured support in writing science students receive, and I’m seeing a lot of over-reliance on ChatGPT.

With emails and the like, I find that I struggle with the pressure of a blank page/screen, so rewriting a mediocre draft is immensely helpful, but that strategy is only viable if you’re prepared to go in and do some heavy editing. If it were a case of people honing their editing skills, that might not be so bad, but I have been seeing lots of output that has the unmistakable ChatGPT tone.

In short, I think it is definitely “grumpy old man” thinking, but that doesn’t mean it’s not valid (I say this as someone who is probably too young to be a grumpy old crone yet).

I think of AI like I do apps: every company thinks they need an app now instead of just a website. They don’t, but they’ll sure as hell pay someone to develop an app that serves as a walled garden front end for their website. Most companies don’t need AI for anything, and as you said: they are shoehorning it in anywhere they can without regard to whether it is effective or not.

But the poor shareholders!

Containerize everything!

Crypto everything!

NFT everything!

Metaverse everything!

This too shall pass.

Docker: 😢

Docker is only useful in so many scenarios. Nowadays people package basic binaries like `tar` into a container, claiming it’s a platform-agnostic solution. Some people are just incompetent and know `docker pull` as the only solution.

Docker has many benefits: containers can be more secure and are easy to update, and, something many people overlook, a Dockerfile gives you detailed installation instructions that actually work if the container works. That’s useful when the wiki is out of date.

Another benefit is that the application owner can change the infrastructure the app uses without the user needing to care. Example: Pi-hole v5 is the backend DNS service + lighttpd for the web interface + PHP, all in one single container. In v6 (beta) they removed lighttpd and PHP and built that functionality into the core service. In my tests, RAM usage went from 100 MB to 20 MB. They also changed the base image from Debian to Alpine, and the image size shrank a lot.

Next benefit: I am moving my home server from x86 to ARM. Docker itself figures out the right architecture and pulls that image.

Sure, Ansible exists as one attempt to solve the installation-instructions problem, but it is not as popular, so the community is smaller. It may also leave you in a bad state (unlike containers, where you can easily delete everything and start fresh). Then there are VMs, but IMO they waste too many resources.
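Roughly, “Docker figures out the right architecture” works like this: a multi-arch image is published as an image index (manifest list) with one entry per platform, and the client picks the entry matching the host. Below is a minimal Python sketch of that selection step, assuming a hypothetical two-entry index with placeholder digests. The real client also matches the CPU variant (e.g. arm/v7) and talks to a registry, which this skips, and it only works if the publisher actually pushed an ARM build.

```python
import platform
from typing import Optional

# Partial mapping from platform.machine() values to the architecture
# names used in OCI image indexes / Docker manifest lists.
ARCH_ALIASES = {"x86_64": "amd64", "amd64": "amd64",
                "aarch64": "arm64", "arm64": "arm64"}


def host_arch() -> str:
    """Return this machine's CPU architecture in OCI naming."""
    machine = platform.machine().lower()
    return ARCH_ALIASES.get(machine, machine)


def select_manifest(index: dict, os_name: str = "linux",
                    arch: Optional[str] = None) -> str:
    """Return the digest of the first manifest matching os_name/arch.

    Ignores the optional 'variant' field for brevity.
    """
    arch = arch or host_arch()
    for entry in index.get("manifests", []):
        plat = entry.get("platform", {})
        if plat.get("os") == os_name and plat.get("architecture") == arch:
            return entry["digest"]
    raise LookupError(f"no image published for {os_name}/{arch}")


# Hypothetical two-architecture index; the digests are placeholders.
index = {
    "manifests": [
        {"digest": "sha256:aaaa", "platform": {"os": "linux", "architecture": "amd64"}},
        {"digest": "sha256:bbbb", "platform": {"os": "linux", "architecture": "arm64"}},
    ]
}
print(select_manifest(index, arch="arm64"))  # sha256:bbbb
```

Calling `select_manifest(index)` with no `arch` uses the host’s own architecture, which is the part that makes moving from x86 to ARM painless.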

LXC – natively containerize an application (or multiple)

systemd-run – can natively limit CPU shares and RAM usage
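A minimal Python sketch of the systemd-run approach, assuming a systemd host with cgroup v2. It only builds the command line, and `./my-app` is a hypothetical binary. `MemoryMax=` is a hard memory cap; `CPUWeight=` is the cgroup-v2 successor of the older `CPUShares=` setting, a relative share (default 100) rather than a hard limit.

```python
import shlex
from typing import List, Sequence


def systemd_run_argv(cmd: Sequence[str], memory_max: str = "512M",
                     cpu_weight: int = 50, scope: bool = True) -> List[str]:
    """Build a systemd-run command that runs `cmd` under resource limits."""
    argv = ["systemd-run"]
    if scope:
        # Run in the foreground as a transient scope unit instead of a service.
        argv.append("--scope")
    argv += ["-p", f"MemoryMax={memory_max}"]  # hard RAM cap
    argv += ["-p", f"CPUWeight={cpu_weight}"]  # relative CPU share (default 100)
    argv += list(cmd)
    return argv


argv = systemd_run_argv(["./my-app", "--port", "8080"])
print(shlex.join(argv))
# systemd-run --scope -p MemoryMax=512M -p CPUWeight=50 ./my-app --port 8080
# On a systemd host, subprocess.run(argv, check=True) would launch it.
```

Without root you would typically add `--user`, which may need the relevant cgroup controllers delegated to your user manager before these properties take effect.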

What’s wrong with containers?

I think the complaint is that apps are being designed with containerization in mind when they don’t need it

Any examples spring to mind? I’ve built apps that are only distributed as containers (because for their specific purpose it made sense and I am also the operator of the service), but if ya don’t want to run it in a container… just follow the Dockerfile’s “instructions” to package the app yourself? I’m sure I could come up with a contrived example where that would be impractical, but in almost every case a container app is just a basic install script written for a standard distro and therefore easily translatable to something else.
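To make “just follow the Dockerfile’s instructions” concrete, here is a minimal Python sketch that pulls the `RUN` steps out of a Dockerfile into a plain shell script you could adapt for a native install. It is an illustration under heavy assumptions, not a converter: it ignores `COPY`, `ENV`, `WORKDIR`, build arguments, `RUN` flags such as `--mount`, and heredocs, and `run_steps`/`to_script` are hypothetical names.

```python
import json
import shlex
from typing import List


def run_steps(dockerfile: str) -> List[str]:
    """Return the commands from a Dockerfile's RUN instructions, in order."""
    # 1. Join backslash-continued lines into logical instructions,
    #    dropping blank lines and comment lines.
    logical, buf = [], ""
    for raw in dockerfile.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.endswith("\\"):
            buf += line[:-1].rstrip() + " "
            continue
        logical.append(buf + line)
        buf = ""
    if buf:
        logical.append(buf.strip())

    # 2. Keep only RUN instructions (COPY, ENV, WORKDIR, ... are ignored).
    steps = []
    for instr in logical:
        parts = instr.split(None, 1)
        if parts[0].upper() != "RUN" or len(parts) < 2:
            continue
        body = parts[1].strip()
        if body.startswith("["):   # exec form: RUN ["make", "install"]
            steps.append(shlex.join(json.loads(body)))
        else:                      # shell form: RUN apt-get update && ...
            steps.append(body)
    return steps


def to_script(steps: List[str]) -> str:
    """Wrap the steps in a POSIX shell script that stops on the first error."""
    return "#!/bin/sh\nset -e\n" + "\n".join(steps) + "\n"


example = """\
FROM debian:bookworm-slim
# install runtime deps
RUN apt-get update && \\
    apt-get install -y --no-install-recommends ca-certificates
COPY . /app
RUN ["make", "-C", "/app", "install"]
"""
print(to_script(run_steps(example)))
```

You would still need to review the result by hand, since those commands were written to run as root inside a throwaway base image, not on your own system.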

FOSS developers don’t owe you a pre-packaged `.deb`. If you think distributing one would be useful, read up on `debhelper`. But as someone who’s done both, a `Dockerfile` is certainly much easier than `debhelper`. So “don’t need it” is a statement that only favors native packaging from the user’s perspective, not the maintainer’s. Can’t really fault a FOSS developer for doing the bare minimum when packaging an app.

also! it’s worth noting that not all FOSS developers are Debian (or even Linux) devs. Developers of open source projects including .NET Core don’t “owe” us packaging of any kind, but the topic here is unnecessary containerization, not a social contract to provide it.

I am not the person who posted the original comment so this is speculation, but when they criticized “containerizing everything” I suspect they meant “Yes client, I can build that app for you, and even though your app doesn’t need it I’m going to spend additional time containerizing it so that I can bill you more” but again you’d have to ask them.

Does “doesn’t need it” mean “wouldn’t be improved by it”? Examples?

“Yes, client! I can build that app for you! I’m going to bill you these extra items for containerization so I can get paid more”

Not sure about that hot take. Containers are here for the long run.

Put a curved screen on everything, microwave your Thanksgiving turkey, put EVERYTHING including hot dogs, ham, and olives in gelatin. In the future, only useful things will have AI in them, and I have a hard time convincing the hardcore anti-AI crowd of that.

Don’t forget microservices!

At this point, I’m full-on ready to make “Thou shalt not make a machine in the likeness of a human mind” global international law and a religious commandment. At least that way, we can burn all AI grifters as witches!